Posted on Saturday, 20th February 2010 by Michael

Detecting Malware and other malicious files using md5 hashes

My interest in this research started after I read an article on the topic at http://enclavesecurity.com/ . In it they talk about using the hashes of known malicious files to discover malware and other malicious files on their systems. They also take a deeper look at the recent APT and Aurora attacks on Google. What interested me most, though, was finding a way to automate this process for free and get usable information out of it.

The most important thing to understand before continuing is that this process is not foolproof, because any change to a file changes its hash. For example, if you take the c99.php shell and change the password or add a single space to the PHP, the hash changes and detection by this method becomes impossible. The other issue I have noticed with this methodology is that no one is willing to share all of their information. Many organizations share only bits and pieces. One example is “The Malware Hash Registry” (http://www.team-cymru.org), considered the leading authority on this topic. They make part of their service available online, so you can submit hashes and get back the following information:
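To make the limitation concrete, here is a minimal Python sketch showing that one extra space produces a completely different MD5. The file contents are a made-up stand-in, not the real c99.php shell, and `md5_hex` is a helper name chosen for this example.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Return the MD5 digest of data as a lowercase hex string."""
    return hashlib.md5(data).hexdigest()


# Hypothetical file contents used only for illustration.
original = b"<?php echo 'hello'; ?>"
modified = original + b" "  # the same file with one trailing space

print(md5_hex(original))
print(md5_hex(modified))
print(md5_hex(original) == md5_hex(modified))  # prints False
```

A hash list therefore only catches exact copies of a known file, which is why this approach works best as a supplement to other detection rather than a replacement.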

Ex:1: 7697561ccbbdd1661c25c86762117613 1258054790 NO_DATA

Ex:2: cbed16069043a0bf3c92fff9a99cccdc 1231802137 69

In example 1 you see the md5 hash, then the epoch date and time, then NO_DATA, meaning the registry could not tell whether this hash is malicious. In example 2 you see the same fields, except that instead of NO_DATA you see 69. This number means that 69% of the antivirus vendors they checked the file with found it to be malicious. This info is good, but I do not find it very helpful. It is nice to know that a file was detected as malicious, but is it truly malicious, and if so, what type of malicious file is it: a backdoor, a key logger, or something else? I have emailed them asking if they could provide the detection type, with the understanding that most of their system is private and that they will not disclose the database or the vendors they use to scan the files. I have not heard back from them at this point.
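The three-field response shown in the examples is easy to turn into structured data. The following Python sketch assumes every response line has exactly the whitespace-separated layout of the two examples above (hash, epoch time, then either a percentage or NO_DATA). The names `MhrResult` and `parse_mhr_line` are invented for this illustration and are not part of any official client.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class MhrResult:
    md5: str
    timestamp: datetime            # the epoch field, converted to UTC
    detection_rate: Optional[int]  # None when the registry answers NO_DATA


def parse_mhr_line(line: str) -> MhrResult:
    """Parse one 'hash epoch rate' line as shown in the examples."""
    md5, epoch, rate = line.split()
    return MhrResult(
        md5=md5.lower(),
        timestamp=datetime.fromtimestamp(int(epoch), tz=timezone.utc),
        detection_rate=None if rate == "NO_DATA" else int(rate),
    )


examples = [
    "7697561ccbbdd1661c25c86762117613 1258054790 NO_DATA",
    "cbed16069043a0bf3c92fff9a99cccdc 1231802137 69",
]
for line in examples:
    print(parse_mhr_line(line))
```

Keeping NO_DATA as `None` rather than 0 preserves the difference between "unknown to the registry" and "known but detected by nobody".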

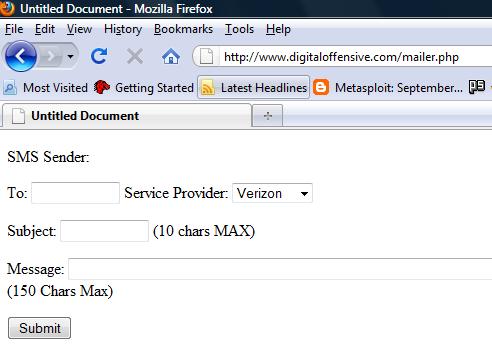

This led me to search the internet for other sites like this that provide additional information along with the hash. I found one other site, http://malwarehash.com, a sub-site of NoVirusThanks.org. They provide an online utility where you submit your hash, and if it is known to be malicious the site gives you information back. See the screenshot below:

As you can see, they provide an additional layer of detail over what you get from the Malware Hash Registry. On top of that, they use a simple PHP script for the query, which makes scripting this much easier:

http://www.malwarehash.com/result.php?hash=1E71DE2D6A89AA9796344BB7FA23AC7E

As you can see, the URL consists of the site, the script, and the hash. The only issue with this site is that its database does not seem to have been updated since 6/2009. I have contacted them as well to ask about this and about their plans for the site, though I have not heard back from them either.
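Because the query is just a URL with one parameter, building it from a hash takes only a few lines. This Python sketch reuses the URL pattern shown above. The upper-casing mirrors the example URL, but whether the site actually requires it is an assumption, and `lookup_url` is a name made up for this example.

```python
from urllib.parse import urlencode

BASE_URL = "http://www.malwarehash.com/result.php"
HEX_DIGITS = set("0123456789abcdefABCDEF")


def lookup_url(md5_hex: str) -> str:
    """Build the query URL for one MD5 hash, rejecting malformed input."""
    md5_hex = md5_hex.strip()
    if len(md5_hex) != 32 or not set(md5_hex) <= HEX_DIGITS:
        raise ValueError(f"not a 32-character hex MD5: {md5_hex!r}")
    # Assumption: upper-case the hash to match the example URL.
    return f"{BASE_URL}?{urlencode({'hash': md5_hex.upper()})}"


print(lookup_url("1e71de2d6a89aa9796344bb7fa23ac7e"))
```

Validating the hash before building the URL keeps stray file names or truncated hashes from being sent to the site.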

With this information in hand, I set out to develop a script that would automate the process. We have found this methodology helpful at work even though it is not 100% accurate. We notice that most malware does not get detected by our antivirus, so by using the hashes and relying on the internet community we are able to improve our detection and remediation of malicious files.

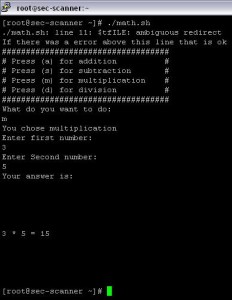

To use this script you will need a Linux user account and some basic knowledge of Linux to set the variables properly. I wrote the script in bash for two reasons: first, it is a piece of cake to do, and second, it forces you to move the malicious file off a Windows environment, where you stand a higher chance of infecting yourself.

First, access your shell and create a directory. You can call it whatever you want; in the code we use a directory called infect, which is set in a variable for easy changing. Next, copy the malware-hash.sh script into the directory one level above the one you just created. Then copy the sed script to a file called clean inside the directory that you created. Once you have done this, chmod the malware-hash.sh script so you can execute it, and chmod the clean script so that malware-hash.sh can read it. After that, all you have to do is copy the suspicious files to the directory you created and execute the script.

The script gets a listing of all the files in that folder, removes the clean script and any dupes from the listing, and then gets the md5 hash of each file. Once it has the hashes, it creates a batch file to be processed against The Malware Hash Registry and saves the results in a clean, human-readable format, with the file name in front of each hash so you know which file the hash belongs to. We use the batch function to stay within the TOS of the site.

Next, the script takes the hashes and runs them through Malwarehash.com. We use the --random-wait option with wget here so that we do not act like a bot or script. If a hash gets a hit for an infection, we grab the page, scrape out the data we want, and process it into a human-readable report. Once that is done, the script combines the results of both checks and emails the final results to the email address provided.
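The hashing stage described above can be sketched in a few lines of Python. This is not the original malware-hash.sh (which is bash and is not reproduced here), only an illustration of the same steps: list the folder, skip the clean file, collapse duplicates, and write one hash per line. The function names and the plain one-hash-per-line batch layout are assumptions; any wrapper lines a lookup service expects around the batch are left out.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, List


def md5_of_file(path: Path) -> str:
    """Hash a file in chunks so large samples need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def collect_hashes(folder: Path, skip: Iterable[str] = ("clean",)) -> Dict[str, List[str]]:
    """Map each distinct MD5 to the names of the files that produced it."""
    skipped = set(skip)
    hashes: Dict[str, List[str]] = {}
    for path in sorted(folder.iterdir()):
        if not path.is_file() or path.name in skipped:
            continue
        # Identical files share one entry, so each hash is queried once.
        hashes.setdefault(md5_of_file(path), []).append(path.name)
    return hashes


def write_batch(hashes: Iterable[str], batch_path: Path) -> None:
    """Write one hash per line (assumed layout for a bulk lookup)."""
    batch_path.write_text("".join(f"{h}\n" for h in hashes))
```

Calling `collect_hashes(Path("infect"))` and passing the result to `write_batch` would produce the batch file. Keeping the file names next to each hash is what later lets the report show which file a result belongs to.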
